PART 1: What is entropy?

About a year before this article's publication, the YouTuber John Hammond made a sponsored video about passbolt. The video describes how passbolt works, how to install it on your own server, how to set up your first user, and so on.

At a certain point in the video, while a password is being typed, we can observe a strange fluctuation in the entropy computation. On closer inspection, the entropy momentarily drops after certain keystrokes and only returns to its normal trajectory with later inputs.

This fluctuation was due to the entropy computation algorithm being able to discern whether the entered string is a password or a passphrase, so that it can select the appropriate computation strategy and accurately determine the corresponding entropy. The fluctuation issue has been solved, but it raised a question in my mind: knowing the entropy of a password, could we break it?

About this blog series

This article is an opportunity to do some research on how sensitive the entropy information is, and to try to answer the following question: "is this article title clickbait or not?". It is aimed at everybody, with or without prior knowledge of the topic, and tries to go into detail (some points may seem obvious to some readers, but everybody should be able to follow without any background in the field).

For the story, I’ve been writing this article for a while and it grew and grew and grew. In the end, I decided to split it into multiple parts, as each of them deserves its own space.

- PART 1: What is entropy?

First, we'll define entropy and its importance in assessing password strength.

- PART 2: Insights from Entropy

Next, we'll examine what entropy reveals about data security, including potential vulnerabilities like shoulder-surfing.

- PART 3: Entropy Analysis: Passwords vs. Passphrases

We will analyse whether there is a significant difference in the entropy levels of passwords compared to passphrases, and what that means for security effectiveness.

What is entropy when we talk about passwords

The entropy of a password is a measure of its strength. It is expressed in `bits`, and the higher the value, the stronger the password. Let’s focus on how it is measured, and why in bits.

What Wikipedia says

We need to understand some definitions of the entropy. According to these Wikipedia pages:

Entropy is a scientific concept that is most commonly associated with a state of disorder, randomness, or uncertainty.

Source: Entropy

In information theory, the entropy of a random variable is the average level of "information", "surprise", or "uncertainty" inherent to the variable's possible outcomes.

Generally, information entropy is the average amount of information conveyed by an event, when considering all possible outcomes.

The entropy is the necessary quantity of information the receiver has to determine without ambiguity what the source transmitted.

Source: Entropy (information theory)

These definitions might be hard to link with the strength of a password. However, we can already get a grasp of what entropy could be. They share common ideas: entropy is tied to randomness, to uncertainty, and to the quantity of information conveyed or to be recovered.

What does it mean for a password

Let's imagine a scenario where a user has created a password for a service and an attacker tries to guess it. Without any knowledge of the password, the attacker has to brute-force it by trying all possible combinations of characters for every possible password length. However, if the attacker has a bit of knowledge about the password (like knowing that only digits are used), then we can already guess that brute-forcing will be easier, right?

The main reason is that with that information, the attacker knows some passwords will not work even without trying.

In other words, the more an attacker knows about the password, the less uncertainty there is about it and the less information they have to recover or guess. That's where the measure of the entropy comes from.

⇒ The entropy of a password is the quantity of information an attacker has to guess in order to break a password.
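To make this idea concrete, here is a minimal sketch that counts how many candidates a brute-force attack has to try, with and without the hint "only digits are used". The 3-character length and the lowercase-plus-digits alphabet are assumptions picked only to keep the numbers small; they are not taken from any real service.

```python
from itertools import product
import string


def candidates(alphabet: str, length: int):
    """Yield every possible string of `length` characters over `alphabet`."""
    for combo in product(alphabet, repeat=length):
        yield "".join(combo)


# Hypothetical setup: a 3-character secret (illustration only).
LENGTH = 3
full_alphabet = string.ascii_lowercase + string.digits  # 36 symbols
digits_only = string.digits                             # 10 symbols

# Without any knowledge, the attacker must consider the whole alphabet.
no_knowledge = sum(1 for _ in candidates(full_alphabet, LENGTH))
# Knowing "digits only" rules out most candidates before trying them.
with_knowledge = sum(1 for _ in candidates(digits_only, LENGTH))

print(no_knowledge)    # 46656 (36 ** 3)
print(with_knowledge)  # 1000  (10 ** 3)
```

With that single piece of knowledge, the attacker has roughly 47 times fewer candidates to try.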

How is it computed?

Let’s imagine that we are an attacker and we want to recover a password that is 10 characters long. Assuming our computers store data in bytes (8 bits per byte) and that each character we type is stored in a single byte (it can vary, but we simplify to 1 byte), one might think that the quantity of information to recover, 8 ⨉ 10 = 80 bits, is the measure of the entropy in our case.

However, even though this answer would be right in some cases, in real life it almost never is. An attacker doesn't have to recover the full 80 bits of information; it's less than that.

Symbols and average quantity of information

To understand why, we need to talk about the concept of symbols described in the Wikipedia pages. A symbol is a possible value in a known set (e.g. 'A' is a symbol in the set of capital letters). These symbols carry an average quantity of information (the information that the attacker needs to recover in our case).

In the previous statement, a symbol (or a character if you prefer) would carry 8 bits of information. 8 bits of information can encode up to 256 different values (2⁸), and if we could type that many different characters on the keyboard, 80 bits of entropy would have been correct.
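As a quick sanity check of that naive estimate, the short sketch below counts the combinations a 10-byte string can take and converts that count back into bits. The variable names are only illustrative.

```python
import math

BITS_PER_BYTE = 8
PASSWORD_LENGTH = 10

# Each byte can take 2^8 = 256 different values.
values_per_byte = 2 ** BITS_PER_BYTE

# Number of distinct 10-byte strings: 256^10, which equals 2^80.
total_combinations = values_per_byte ** PASSWORD_LENGTH

# Converting the count back into bits gives the naive entropy.
naive_entropy_bits = math.log2(total_combinations)

print(values_per_byte)     # 256
print(naive_entropy_bits)  # 80.0
```

This figure only holds if all 256 byte values are equally likely for every character.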

⇒ Remember, the entropy is related to the uncertain information, the information to recover.

On a keyboard that uses Latin letters, like a QWERTY or AZERTY keyboard, we type upper-case and lower-case letters, digits, spaces, punctuation, etc. That is far fewer than 256 different values. With all letters, digits, punctuation and "special" characters, we usually consider only 92 different characters. Here, the uncertainty is over 92 possibilities, not 256.

To know how many bits are required to encode 92 symbols, we need to solve a small equation. The number of different values 8 bits can encode is given by 2⁸ = 256 (where 2 is the number of values a bit can take). Based on that idea, we can find the number of bits required to encode 92 values:

Start with: 2ˣ = 92

Use a log base 2 on both sides: log₂(2ˣ) = log₂(92)

x now can be extracted: x = log₂(92)

Solve for x: x ≈ 6.523 bits

Under this condition, a symbol needs between 6 and 7 bits to be encoded. On average, for 92 symbols, we need 6.523 bits to encode the information. It means that if we use 92 symbols, the average quantity of information a symbol carries is 6.523 bits. An attacker has to recover 6.523 bits of information per character in a password.
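The "6 to 7 bits" bracket can be checked numerically. The sketch below is only an illustration of the equation 2ˣ = 92: it verifies that 6 bits are too few and 7 bits are enough, then computes the exact value of x.

```python
import math

SYMBOL_COUNT = 92  # size of the character set considered in this article

# 6 bits encode 64 values (too few), 7 bits encode 128 values (enough).
print(2 ** 6 < SYMBOL_COUNT <= 2 ** 7)  # True

# Whole number of bits needed to store one symbol.
whole_bits = math.ceil(math.log2(SYMBOL_COUNT))
print(whole_bits)  # 7

# Average quantity of information carried by one symbol: x = log2(92).
bits_per_symbol = math.log2(SYMBOL_COUNT)
print(f"{bits_per_symbol:.4f}")  # 6.5236
```

The text above truncates this value to 6.523 bits.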

So for a 10 character long password that uses up to 92 symbols the entropy is 10 times the average quantity of information a symbol carries:

10 · log₂(92) ≈ 65.235 bits

With the “full” set of characters, a 10-character-long password actually has about 65.2 bits of entropy, not 80 bits. Notice that the result depends on the number of symbols. If a service asks for a password that only contains digits (10 symbols), the entropy of a 10-character-long password would be:

10 · log₂(10) ≈ 33.219 bits

Reducing the quantity of available symbols reduces the uncertainty of their usage and thus the entropy.
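The two computations above follow the same pattern: the length multiplied by log₂ of the number of symbols. Here is a minimal sketch of that formula. The function name `password_entropy_bits` is made up for this example, and the formula assumes every character is picked at random, independently and with equal probability.

```python
import math


def password_entropy_bits(length: int, symbol_count: int) -> float:
    """Entropy in bits of a random password: length * log2(symbol_count).

    Assumes each character is chosen uniformly and independently.
    """
    if length < 0 or symbol_count < 1:
        raise ValueError("length must be >= 0 and symbol_count >= 1")
    return length * math.log2(symbol_count)


print(f"{password_entropy_bits(10, 92):.2f}")   # 65.24 (92-symbol set)
print(f"{password_entropy_bits(10, 10):.2f}")   # 33.22 (digits only)
print(f"{password_entropy_bits(10, 256):.2f}")  # 80.00 (naive byte estimate)
```

A password chosen by a human is rarely uniformly random, so this value is better read as an upper bound for a given length and character set.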

In conclusion

The entropy of a password represents the quantity of bits an attacker has to recover to break it. It grows linearly with the length of the password and logarithmically with the size of the character set used. By requiring the inclusion of specific characters in a password, services are requesting an expansion of the character set, thereby increasing the password's entropy.

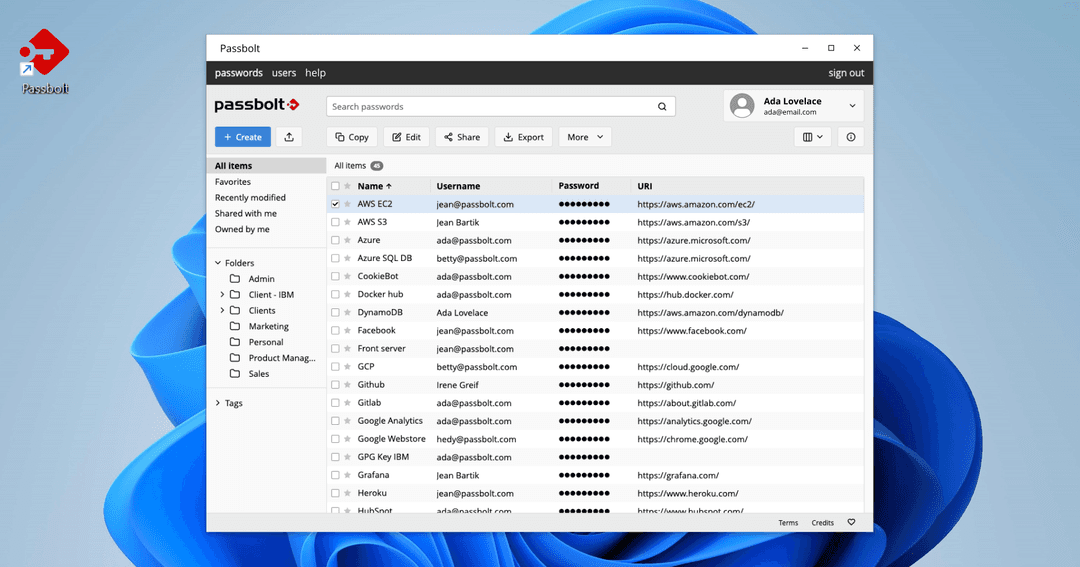

One might think that a higher entropy means a stronger password, and while this is correct, the idea has to be handled carefully. You might have heard stories about people having their passwords written on sticky notes for everybody in the office to see (if you do that, it’s time to halt this practice and consider using passbolt! :D). Even though the password would be considered strong, its disclosure makes its entropy drop to zero. An attacker reading the paper does not need to guess anything, as there is no uncertainty anymore.

Furthermore, a strong password could be leaked in a known dictionary, and in this case its entropy is also considered to be zero, as the remaining possibilities become a negligible fraction of the total possibilities. Passbolt addresses this issue by checking, with services such as ';--have i been pwned?, that passwords are not part of a known data breach before they are created.

Determining what strength a password should have is not straightforward. It depends on various factors, such as the sensitivity of the protected information and where the password will be used. For instance, credit card PINs are typically just 4 to 6 digits long, which means they have very low entropy and could technically be brute-forced in a fraction of a second. However, since they're paired with something users must carry around, and thanks to protective measures limiting the number of attempts, security and usability are balanced.
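To put numbers on the PIN example, the sketch below computes the entropy of a random PIN and the chance of guessing it within a limited number of tries. The limit of 3 attempts is an assumption made for the illustration; actual limits depend on the card issuer.

```python
import math


def pin_entropy_bits(digits: int) -> float:
    """Entropy in bits of a uniformly random PIN with `digits` digits."""
    return digits * math.log2(10)


def guess_probability(digits: int, attempts: int) -> float:
    """Chance of guessing a uniformly random PIN within `attempts` distinct tries."""
    total = 10 ** digits
    return min(attempts, total) / total


MAX_ATTEMPTS = 3  # assumed lockout threshold, for illustration only

for digits in (4, 6):
    bits = pin_entropy_bits(digits)  # about 13.29 bits for 4 digits, 19.93 for 6
    chance = guess_probability(digits, MAX_ATTEMPTS)
    print(f"{digits}-digit PIN: {bits:.2f} bits, chance within {MAX_ATTEMPTS} tries: {chance}")
```

Even with only about 13 bits of entropy, a 4-digit PIN leaves an attacker 3 chances in 10,000 once the number of attempts is capped.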

If you're still uncertain about the ideal strength for your password, don't fret: organisations with security expertise publish recommendations on minimum password strength. According to OWASP and NIST at the time of writing, the minimum password length should be 8 characters; below this threshold they consider a password weak (with the 92-symbol set used above, that is under 52.19 bits of entropy).

Passbolt advises using passwords with a higher entropy, especially for services with unknown password processing methods. A minimum of 80 bits of entropy is enforced for password generation, while 112 bits is recommended.
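To get a feeling for what these thresholds mean in practice, here is a small sketch that computes how many random characters are needed to reach a target entropy. It reuses the 92-symbol set from this article, and the helper name `min_length_for` is hypothetical; this is not how passbolt's generator is implemented.

```python
import math


def min_length_for(target_bits: float, symbol_count: int) -> int:
    """Smallest length whose entropy (length * log2(symbol_count)) reaches target_bits."""
    return math.ceil(target_bits / math.log2(symbol_count))


SYMBOL_COUNT = 92  # character set size used throughout this article

print(min_length_for(80, SYMBOL_COUNT))   # 13 characters for the 80-bit minimum
print(min_length_for(112, SYMBOL_COUNT))  # 18 characters for the 112-bit recommendation
```

With digits only (10 symbols), the same 80-bit target would need 25 characters.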

That’s it for this part; you now know enough about entropy to tackle the next one. In the next article, we will explore the information we can extract from knowing the entropy of a password and see whether we could break the secret knowing its strength.